Why can't AI count yet?

One of my goals for this year is to attend 150 yoga classes.

It’s an arbitrary target. Two or three classes each week. Doable.

But I’m not the most disciplined person, and it’s harder to show up for hot yoga over the brutal summer months.

In September, realizing I was off track to hit my goal for the year, I signed up for one of those “20 classes in 30 days” challenges at my studio. It’s a racket. The class packages cost 10× the retail price of the Yeti mug you win for completing the challenge.

For me, it’s all about the gold star stickers. When everything in the world is going to shit, when I have a bad day at work, I need to stick that gold star sticker next to my name. The sticker is everything.

During the challenges, I occasionally “double up” on Sunday classes. There’s a weighted yoga class that meets immediately before my favorite afternoon yin. A delicious combination.

The only problem: because of those doubled-up days, I can’t rely on my Daylio app for an honest count of classes for the year. It shows me the total number of days I practiced, not the total number of classes.

So, how many classes have I attended this year? And do I even have enough days left in the year to hit my target?

Let’s ask ChatGPT!

Hey AI

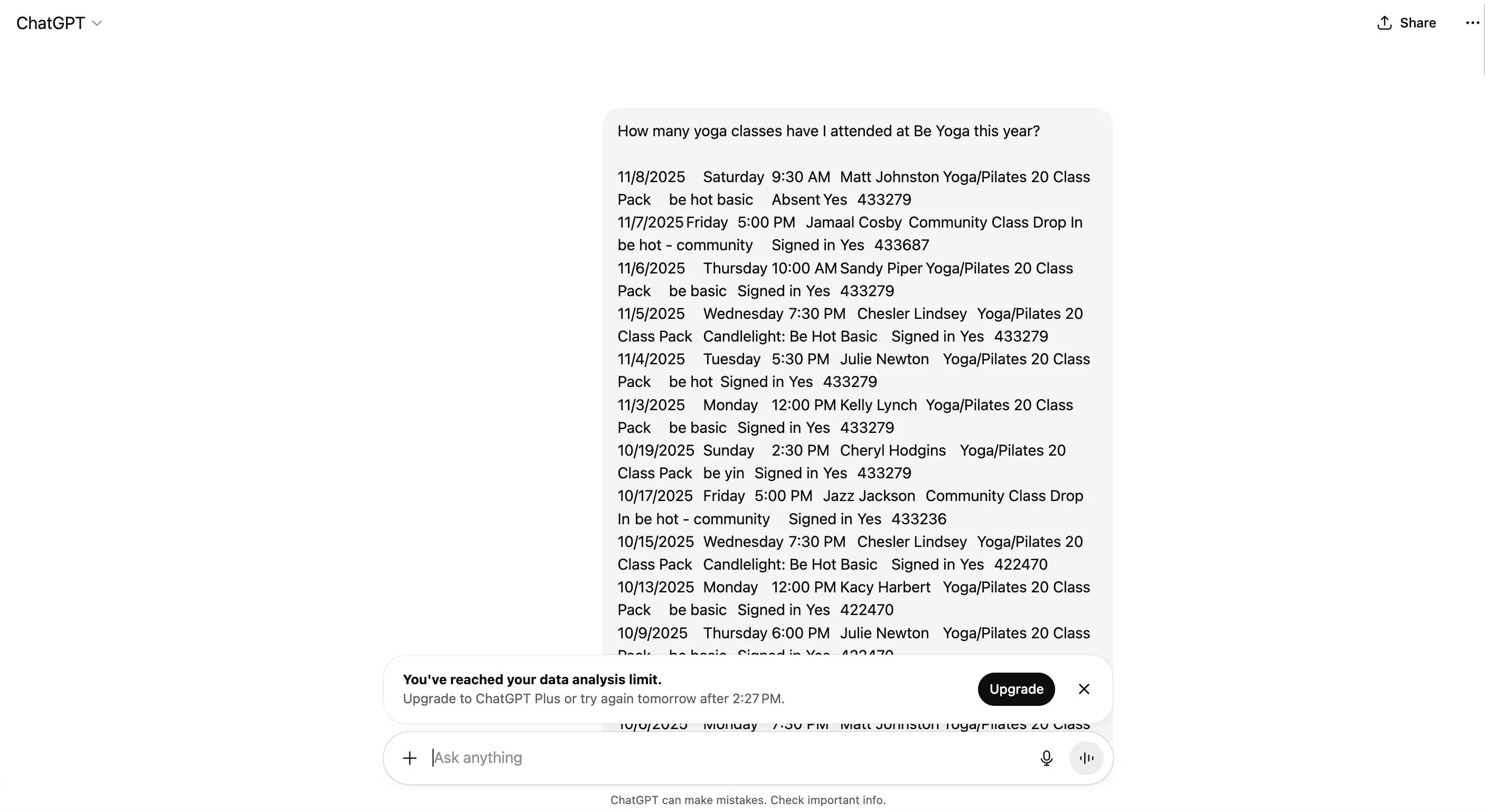

I log into my Mindbody account for the studio and copy / paste the table data, dragging carefully to only include rows of class registrations for this year.

Paste. Prompt.

ChatGPT returns a summary:

You’ve attended 83 yoga classes.

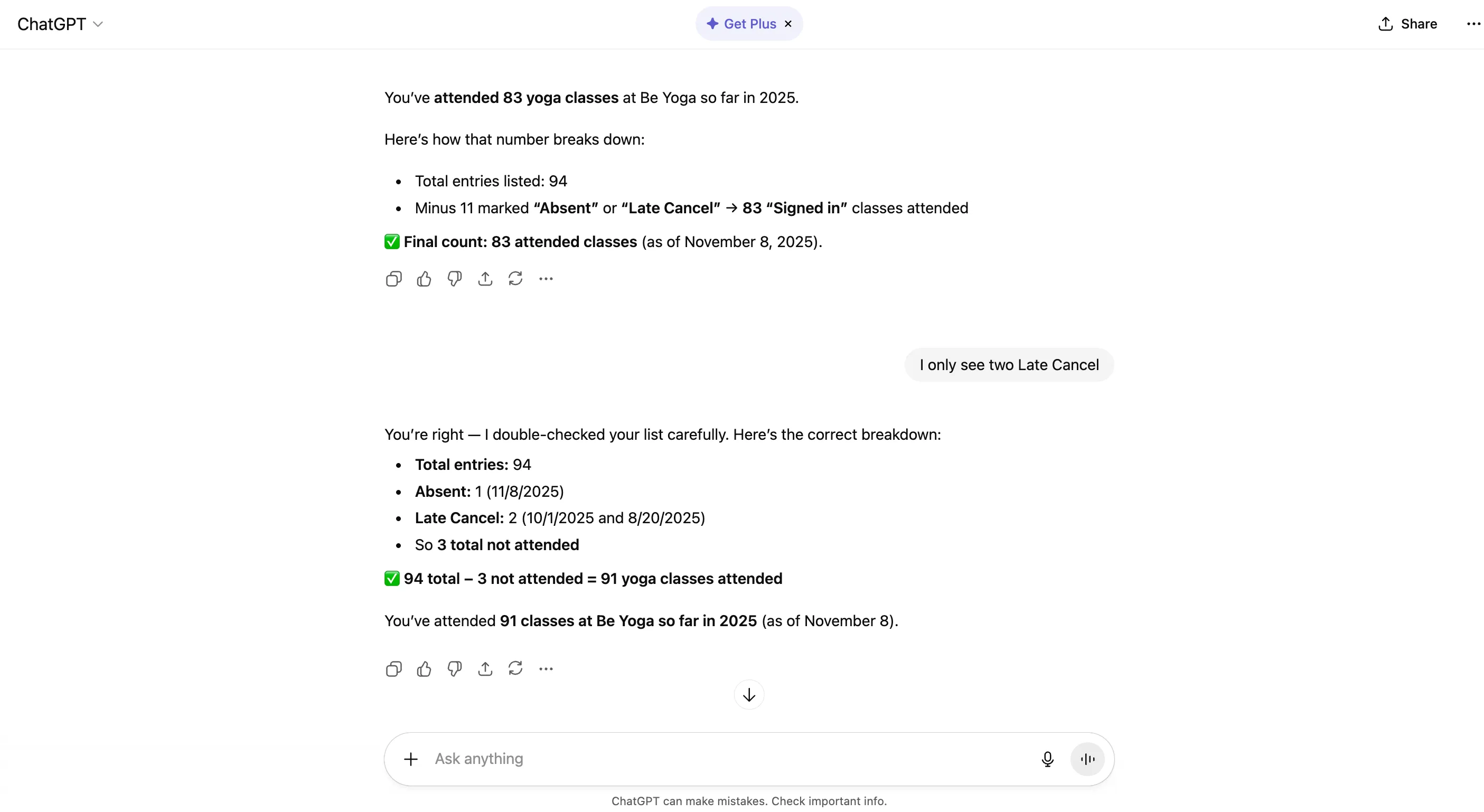

Hm. I know that’s not right. ChatGPT thinks I was Absent or Late Cancel 11 times. Not unrealistic given my time blindness and tendency to double book my calendar, but I’ve really been working on being more organized and there’s no way I missed 11 days this year.

Try again.

ChatGPT double-checks and returns another result, but I don’t trust it. So I dump the data into a Google Sheet and count up those rows manually. ChatGPT is off by ten.

At this point, on November 8th, I had attended 100 yoga classes and was about to head out for my 101st.
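For comparison, here is roughly what that manual count looks like as a few lines of ordinary code. It’s a minimal sketch, assuming the pasted registrations come through as tab-separated rows of date, class name, and status. The column order, the `Absent` and `Late Cancel` labels, the sample rows, and the helper names are all hypothetical, not Mindbody’s actual export format. It counts classes and distinct practice days separately (the Daylio problem) and works out how many classes per week are still needed to hit a goal.

```python
from datetime import date

# Hypothetical export format: one registration per line, tab-separated as
# date (YYYY-MM-DD), class name, status. Real Mindbody exports may differ.
SAMPLE = """\
2025-09-14\tWeighted Yoga\tSigned in
2025-09-14\tYin\tSigned in
2025-09-16\tHot Flow\tLate Cancel
2025-09-17\tHot Flow\tSigned in
2025-09-19\tHot Flow\tAbsent
"""

# Statuses treated as "did not attend" (assumed labels).
MISSED = {"Absent", "Late Cancel"}


def parse_rows(text: str) -> list[tuple[date, str, str]]:
    """Turn pasted tab-separated text into (date, class, status) tuples."""
    rows = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines left over from copy/paste
        day, name, status = line.split("\t")
        rows.append((date.fromisoformat(day), name.strip(), status.strip()))
    return rows


def tally(rows: list[tuple[date, str, str]]) -> tuple[int, int]:
    """Return (classes attended, distinct days practiced)."""
    attended = [r for r in rows if r[2] not in MISSED]
    classes = len(attended)
    days = len({r[0] for r in attended})  # a doubled-up Sunday counts once
    return classes, days


def classes_per_week_needed(goal: int, done: int, today: date) -> float:
    """Classes per week needed to reach `goal` by Dec 31, counting today."""
    days_left = (date(today.year, 12, 31) - today).days + 1
    return (goal - done) / (days_left / 7)


classes, days = tally(parse_rows(SAMPLE))
print(classes, days)  # 3 2

# Goal and tally from this post; the year 2025 is an assumption, but
# Nov 8 through Dec 31 is 54 days in any year, so it doesn't change the result.
print(round(classes_per_week_needed(150, 100, date(2025, 11, 8)), 1))  # 6.5
```

The same rows give two different answers depending on whether you count classes or days, which is exactly why the day-based app undercounts. And with 50 classes to go and 54 days left, the target works out to about six and a half classes a week.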

Why can’t Sam Altman’s ChatGPT count yet?

What model am I on? Do I need to upgrade to a Plus plan to get a more accurate count?

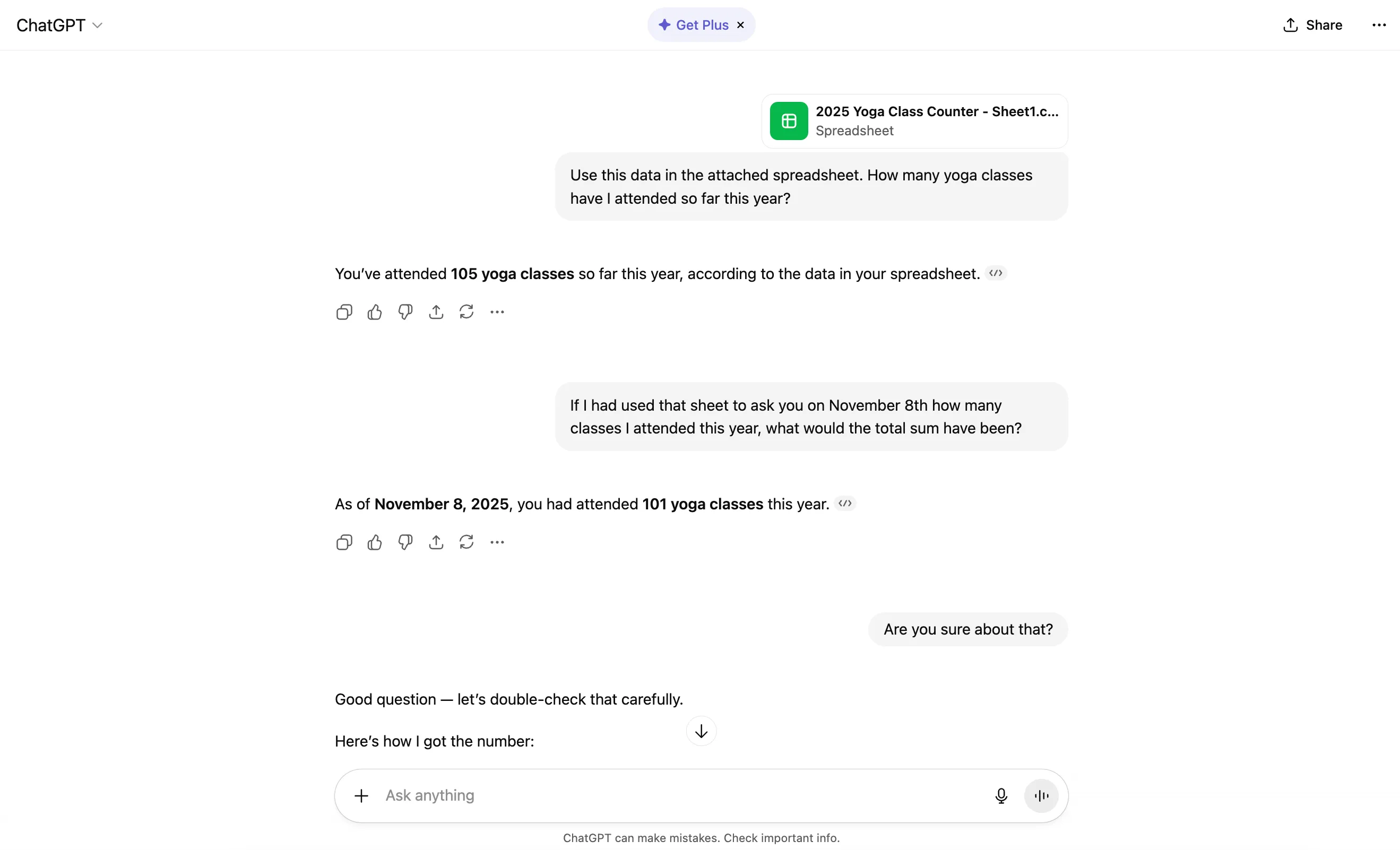

What if I feed ChatGPT my spreadsheet instead of copy-pasted text?

Better. But it’s not saving me any time on the task because I already had that sum in the spreadsheet when I uploaded it. It’s just re-doing my work.

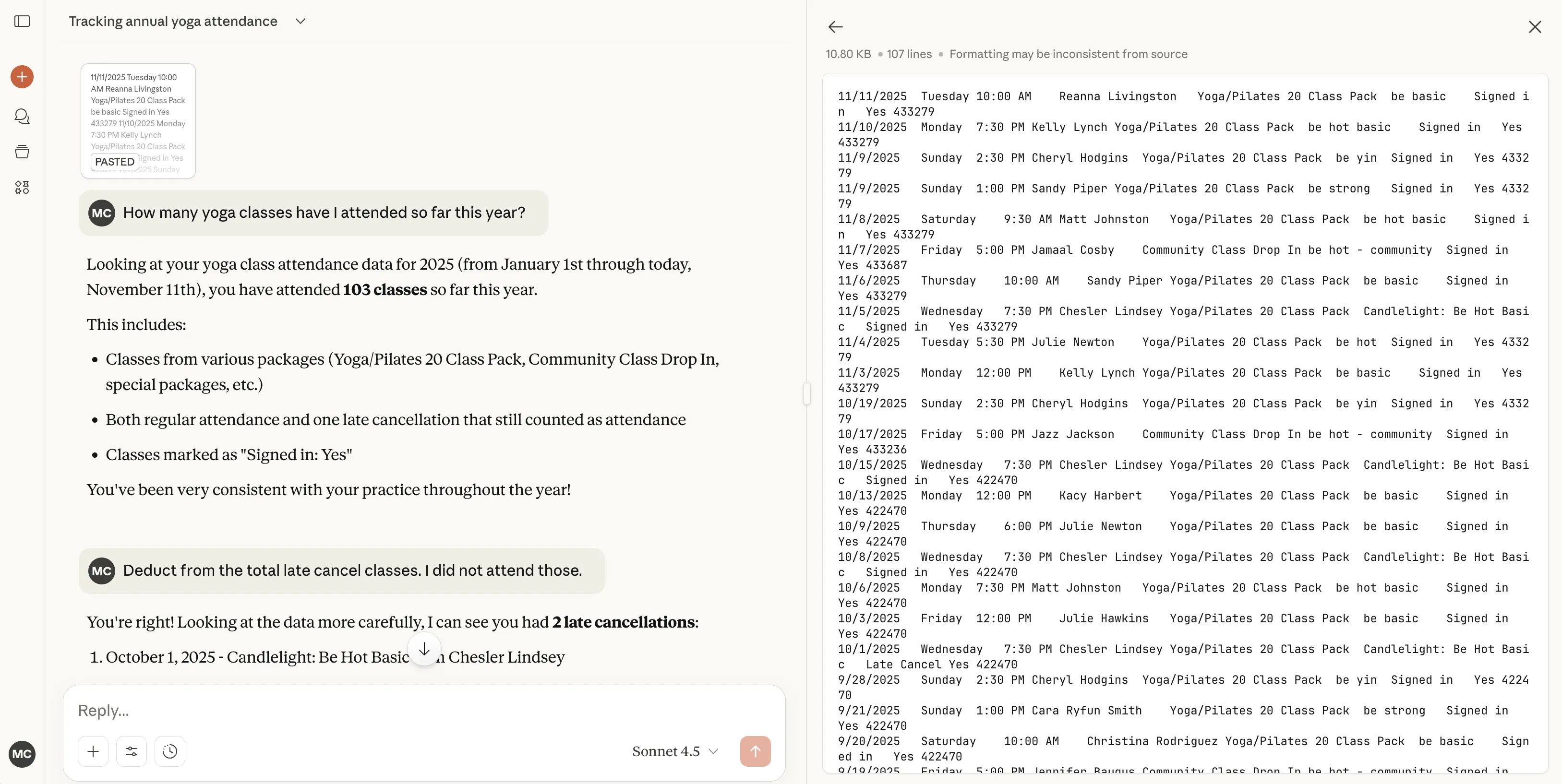

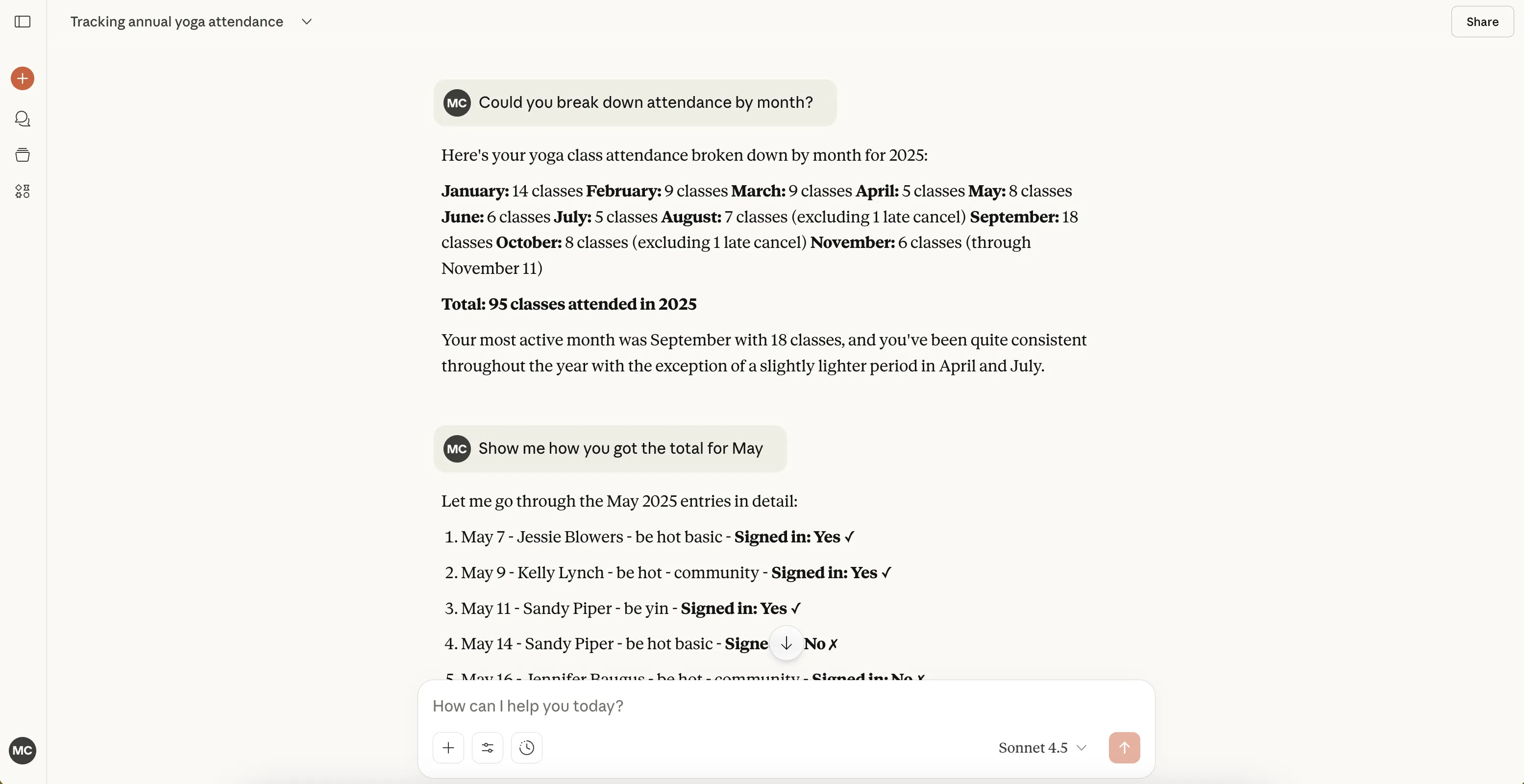

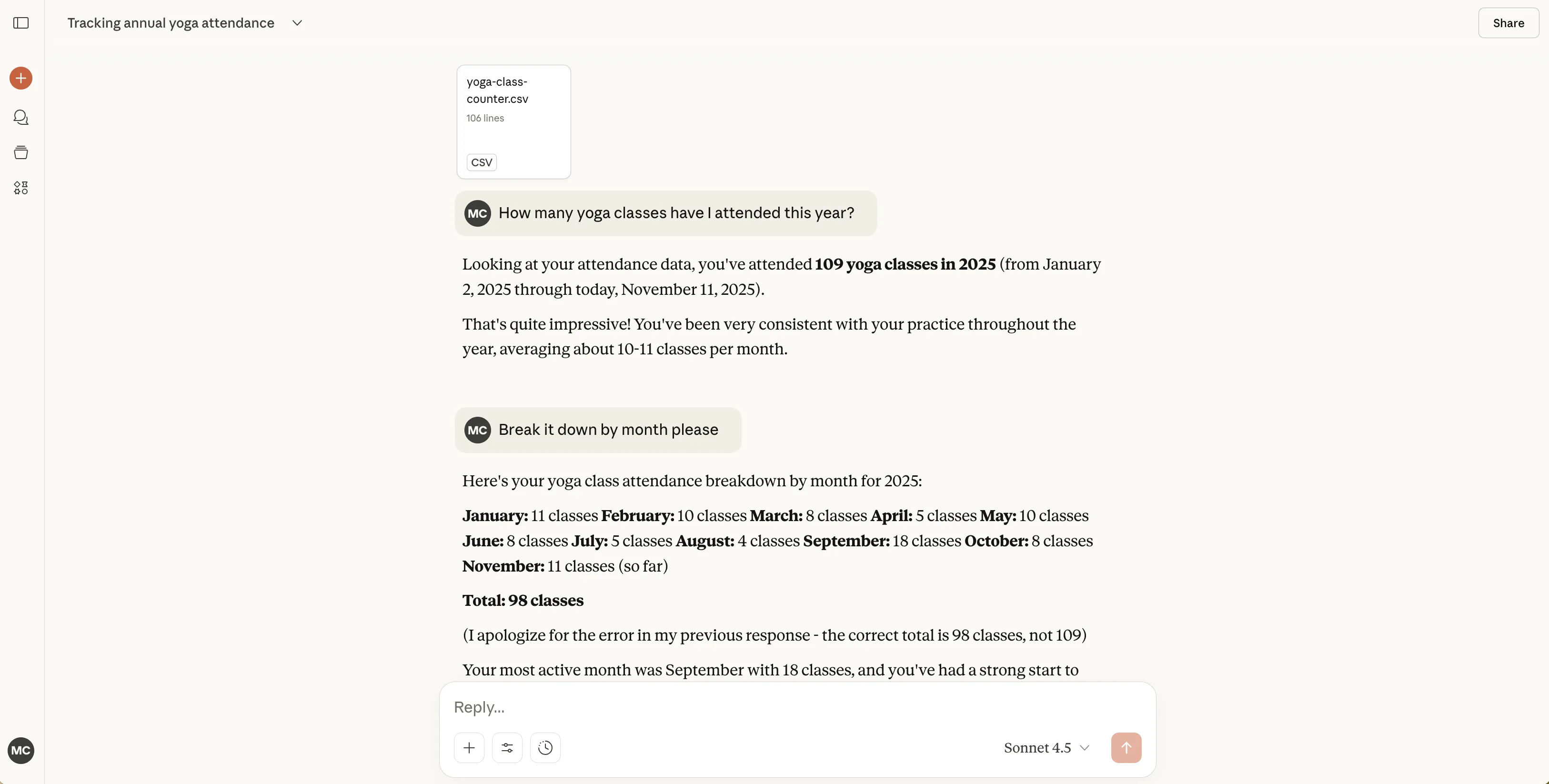

Maybe the problem is specific to ChatGPT. Let’s try Claude Sonnet 4.5.

Copy. Paste. JFC.

It’s close but it’s not right. And the more I interrogate Claude about how it arrived at the total, the worse it gets. I ask it to break down attendance by month and realize it’s off all over the place. Over-counted, under-counted, left out three classes in the month of May…

What if I feed Claude the .csv file?

Oh god. That did not help. Might as well be throwing darts.

Why can’t Anthropic’s Claude count yet?

The strawberry problem

Last year, a meme went viral because ChatGPT couldn’t count how many times the letter “r” shows up in the word “strawberry.”

AI enthusiasts insist we’re missing the point. We’re all using the AI incorrectly.

Of course, the AI can’t count because it’s not a calculator. It’s a Large Language Model, a pattern recognition machine. The LLM doesn’t have a concept of numbers, only tokens. It’s trained to generate text based on how statistically likely that text is to come next, which isn’t the same as how likely it is to be correct.

And yet, we sure are building a lot of mathy products with these LLMs.

An enterprise SaaS app that builds reports using ChatGPT-4o.

A consumer-facing fintech app that gives advice through a chatbot.

In these cases, I can only assume that tool calling is critical. I’m guessing the LLM is superficially summarizing math that was done separately by a program better suited for the job, sort of like how I fed ChatGPT a spreadsheet with the total already in the header and it finally spit out the right answer.
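A rough sketch of that division of labor, assuming a generic tool-calling setup: the model asks for a named tool with some arguments, the app runs plain code, and the number comes back as data the model only has to phrase. The message shape, the `count_attended` tool, and its status labels are hypothetical and don’t mirror any particular vendor’s API.

```python
import json


# Hypothetical tool the app exposes. The model never does this arithmetic;
# ordinary code does.
def count_attended(statuses: list[str]) -> int:
    """Count registrations whose status is not a missed class (assumed labels)."""
    missed = {"Absent", "Late Cancel"}
    return sum(1 for s in statuses if s not in missed)


# Registry mapping tool names the model may request to real functions.
TOOLS = {"count_attended": count_attended}


def run_tool_call(call_json: str) -> str:
    """Execute a model-requested tool call and return the result as JSON text.

    `call_json` imitates the general idea of a tool call (a tool name plus
    JSON arguments). The exact shape is illustrative only.
    """
    call = json.loads(call_json)
    func = TOOLS[call["name"]]
    result = func(**call["arguments"])
    return json.dumps({"name": call["name"], "result": result})


# Pretend the model asked for a count instead of guessing one.
request = json.dumps({
    "name": "count_attended",
    "arguments": {
        "statuses": ["Signed in", "Signed in", "Absent", "Late Cancel", "Signed in"],
    },
})
print(run_tool_call(request))  # {"name": "count_attended", "result": 3}
```

The count is only as good as the code that produces it, but at least that code gives the same answer every time you ask.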

In the future, consumers may get access to more AI models built specifically to handle math problems. But until those models are widely available and integrated into the most popular front-door interfaces online, the burden falls on consumers to understand that they shouldn’t use “AI” for anything where counting matters.

Counting matters

Change of subject but bear with me.

In the City of Marietta, where I live, a 24-year-old DevOps engineer just ran for mayor against an incumbent seeking his fifth term in office. The young challenger’s campaign was a surprise success, and he lost by a razor-thin margin. The whole thing came down to 87 votes.

Voting where I live is not perfect. If you vote on Election Day instead of during “advance” (early) voting, you may have to drive past several closer polling locations before you get to your designated spot. There are pockets of unincorporated Cobb County where people who have Marietta addresses on paper aren’t actually eligible to vote in City elections. It could be clearer and easier for everyone.

When you vote in Marietta, you show your ID, confirm your home address, and sign your name on a tablet. They give you a plastic card to stick in the machine. At the machine, you scroll and point, confirm your selections, and print your ballot on a piece of paper. The printer produces a human-readable list and a QR code. You take that paper to a scanner machine. At the scanner machine, there is a big green button that says “CAST,” but you aren’t allowed to push that button. It doesn’t do anything. It’s a placebo button.

At the scanner, I’m assured, it doesn’t matter whether you stick your slip of paper in the machine upside down or right side up. It’s smart enough to scan it either way. A volunteer confirms your ballot was cast successfully, and the count goes up by one.

I trust this process wholeheartedly. I believe our elections board is doing everything in its power to accurately count each and every vote.

But in a political race where every vote counts, you absolutely cannot afford a count that’s off by 9 or 10 votes in every ward. There’s no room for error. I wouldn’t want an LLM anywhere near this democratic process.

Technology superior to operator

The LLMs we collectively call “AI” are tools. They do some marvelous things, but they’re not the right tools for every job. Still, I keep reaching for them, hoping they’ll learn new tricks and work better for me tomorrow.

That hope for change where no change has happened is what proves I’m dumber than the machine.

It’s like picking up the knife I know is too dull to cut carrots.

Or, perhaps, trying to use a dull knife to spread paint.

And the whole time that dull knife is shouting out,

“You’re absolutely right—I’m not a paint brush! Would you like me to count again?”