AI in daily life

It’s been two years since I started working on my first AI product launch. It’s also been about two years since I started playing with AI as a consumer.

I thought it might be a good moment to write down my thoughts on the topic. The technology is advancing rapidly, and I imagine it will be fun to look back on this post in a few years.

I am by no means an expert in the field. I wouldn’t even describe myself as a power user. This isn’t a heavily researched post. This is what I’d say if a friend or family member asked me, “Hey, you work in tech. What do you think about this AI stuff?”

The best AI doesn’t feel like AI

A lot of AI is infuriating because it feels interruptive and shoehorned in. For example, the little AI helper in Google Docs always seems to be in the way when I’m trying to leave a comment.

Not now, sparkly buddy!

But my favorite interactions with AI aren’t about AI at all. They’re just magical features within products I use that happen to be powered by AI behind the scenes. In these cases, AI is an implementation detail, not the headline. The benefit of the feature is more interesting than whether or not there’s machine learning involved.

Examples:

- Automatic Image Cleanup: When I upload a photo of my favorite necklace to my digital closet in Indyx, the app uses Photoroom to automatically remove the background around the jewelry

- Remove Filler Words: Descript automatically scrubs all the “uhhhs” and “ummmms” from my video recording so I don’t have to cut them out one by one

- Live Voicemail Transcriptions: My phone automatically converts a voicemail message into text I can read, so I don’t have to find a quiet moment to play back the message and listen

These little conveniences are so nice. They make me glad AI is here to stay!

AI for coding is helpful up to a point

As somebody who likes to code for fun but who isn’t particularly good at it yet, I find AI tools for coding are helpful for getting unblocked.

The way I typically learn how to code is by referencing an example, like a template or an existing app, and then changing and adjusting that boilerplate until it works the way that I’d like. Along the way, if I get stuck, I might go visit the docs or Google a solution.

To the extent that copilot tools can:

- Bootstrap templates for me

- Bring relevant docs examples into my workspace

- Suggest the right command or line of code when I get stuck

I find this to be a net positive. I am cautious not to run commands or merge in changes that I don’t understand. And because of the nature of my personal projects, the stakes are low if something goes wrong.

However, my husband, who is a much more seasoned programmer, has had a different experience. He’s been impressed with GitHub Copilot for writing tests and he’s playing with AI suggestions as he works. But he sometimes describes feeling stuck in the interface with the AI mode getting in the way.

For instance, he wants to edit and adjust a specific code suggestion but he can’t do this until he accepts all suggestions made by AI.

It seems like there’s still work to smooth over the experience for engineers co-writing (co-coding?) with AI.

AI for prototyping

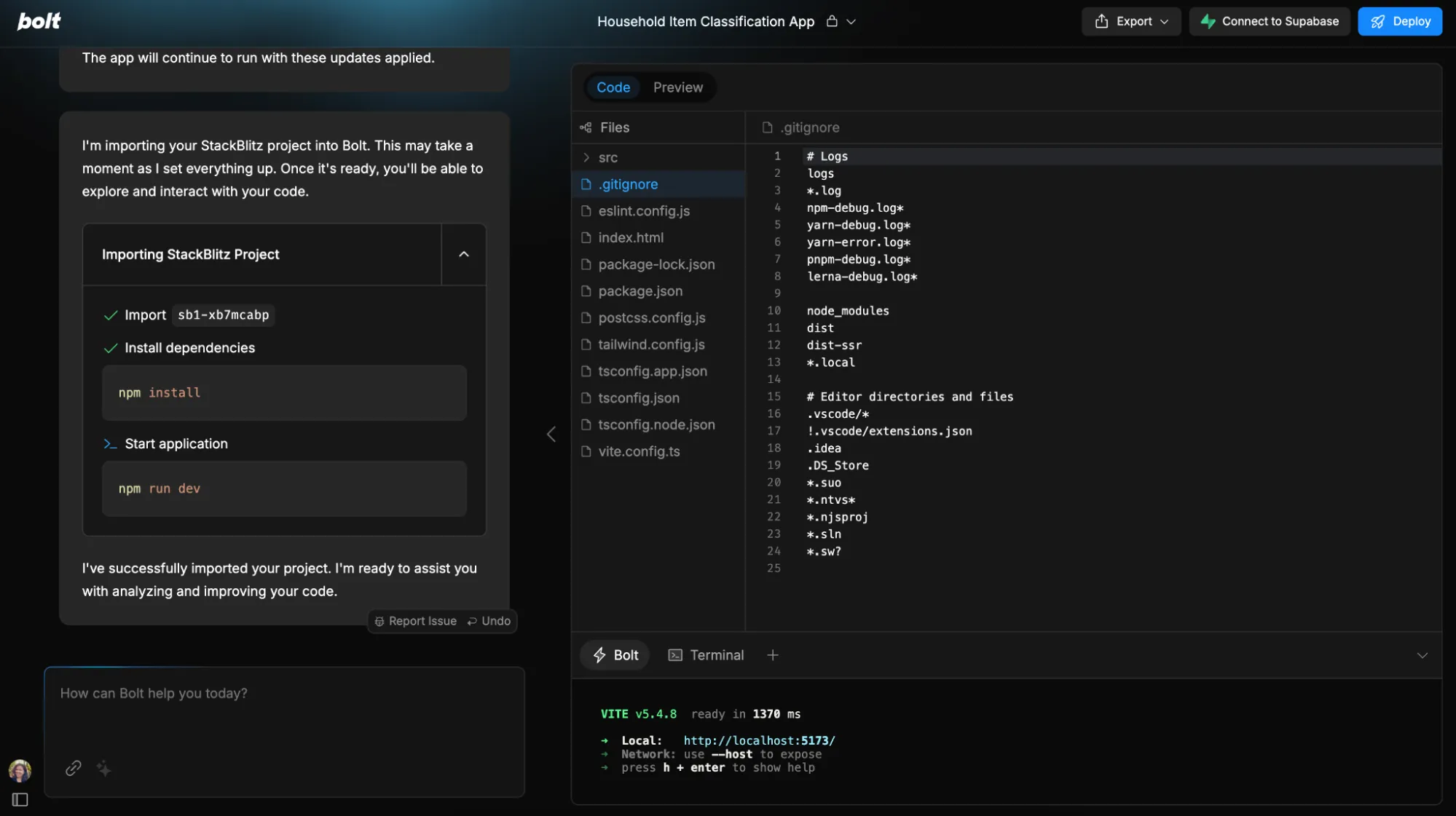

Up until now, I haven’t had much interest in “no-code” app builders, but I am intrigued by tools like Bolt where you can quickly build while also having the generated code right there to read and inspect.

A combination chat UI with code in bolt.new

Last week, I had virtual coffee with a founder who’s switching from Bubble to Lovable and rebuilding their app from the ground up.

I imagine I could get pretty far prototyping an app concept with an “idea-to-code” tool. But I’m skeptical that I could really build a launch-ready product on my own with one of these tools acting as my first engineering hire. At some point, I’d probably want to tag in a real human engineer with expertise to make sure my database is secure and set up to scale.

The novelty of ChatGPT

I’m conflicted about my ChatGPT Plus subscription. Setting my personal political positions aside, I’m still not convinced the consumer-facing app delivers measurable value. (When the OpenAI models are powering features in other services, that’s a different story.)

I’m spending a lot of time playing with ChatGPT because I think it’s the most widely adopted AI app for everyday people, and I want to be plugged into the experience millions of people are having at this stage in the game. I think ChatGPT might be Google in a sea of Bings, but there’s a decent chance it’s actually Yahoo! and I’ll move on over to whatever AI juggernaut picks up steam next. (What’s up, Anthropic?)

Pleasant surprises with ChatGPT

Here are some use cases where I’ve been pleasantly surprised by ChatGPT:

-

Overcoming writer’s block: If I’m psyched out about what marketing headline is going to work best and everything starts to look like crap on the page, I like asking ChatGPT for ideas. I don’t usually use its suggestions as-is, but it’s a good challenge to see if I can write something better.

-

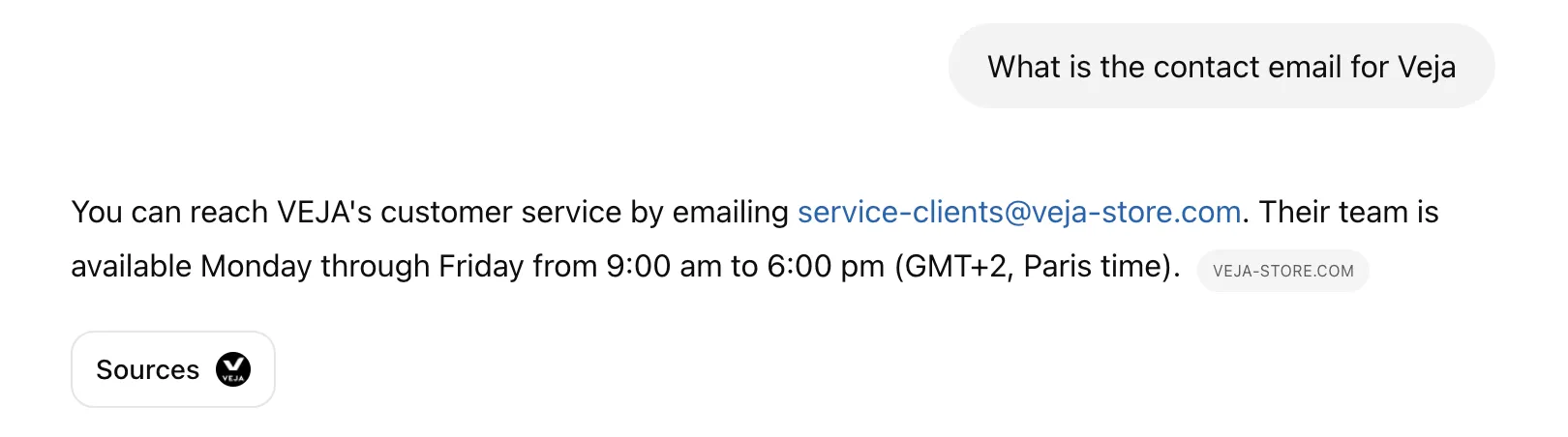

Reaching unreachable customer service: The other day I got stuck in a customer service loop trying to figure out how to return a pair of sneakers. The localized US version of the website kept pointing me to an FAQ that didn’t have the information I needed, and the web forms were too restrictive with preset options that didn’t match my problem. I asked ChatGPT how to contact a human and it spit out the correct address right away.

-

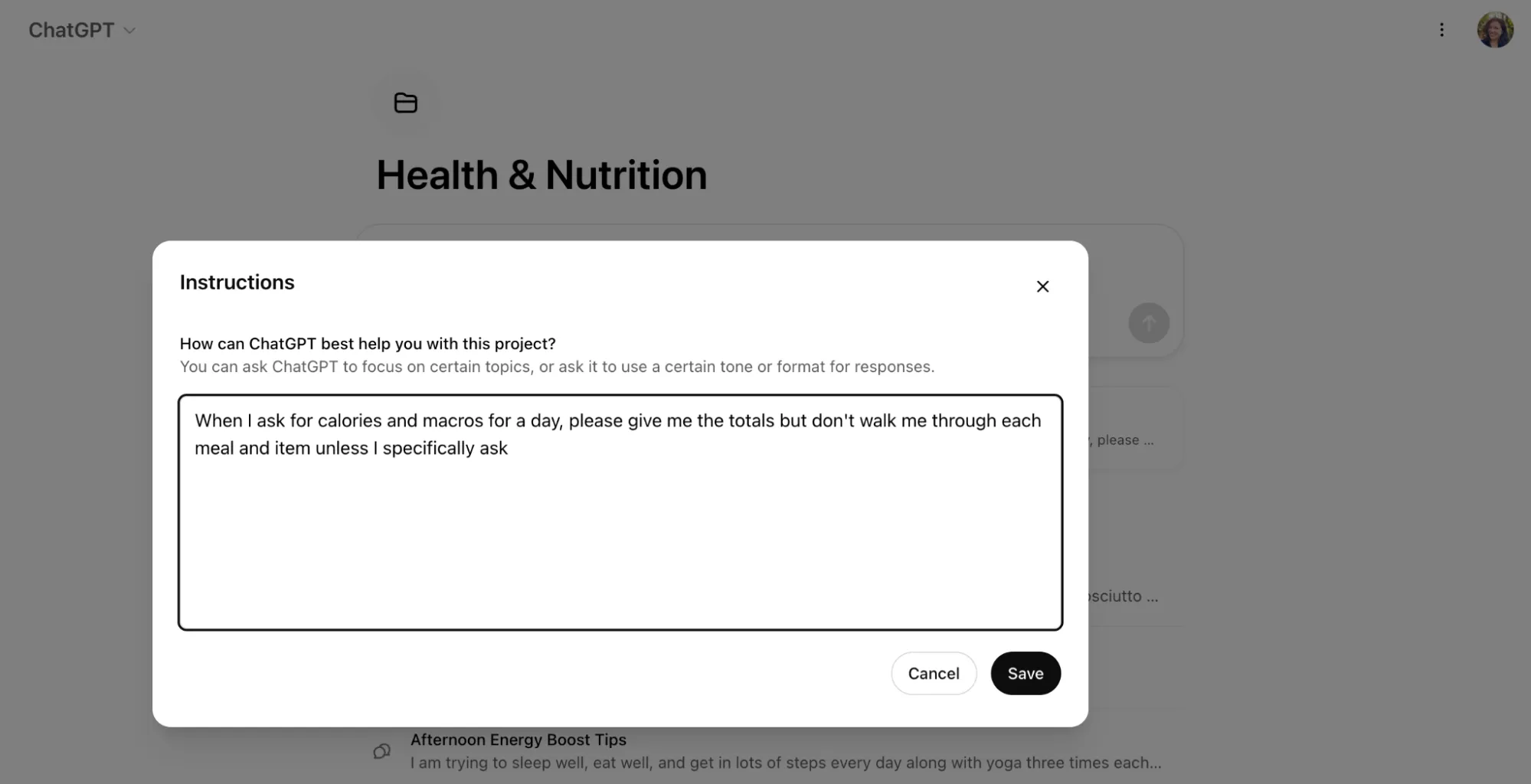

Health and nutrition analysis: As I’m getting older, I’m a lot more concerned about my health and nutrition. But I find diet-focused apps to be extremely triggering and bad for my mental health. I like that I can bullet point list everything I ate for the day and ChatGPT can give me a summary of my macros and calories without any judgement or shaming. In this case, the obscurity and lack of precision is a benefit. I don’t want to obsess about the calories in a croissant. I just want to know how I’m doing overall. This is nice!

While these use cases are all entertaining, I’m mostly playing with ChatGPT to see what it can do. If ChatGPT went down, my productivity wouldn’t be affected much. I could have used Google Search’s AI Overview to get that help desk email. For a lot of these workflows, I might not even seek out an alternative app.

ChatGPT let-downs

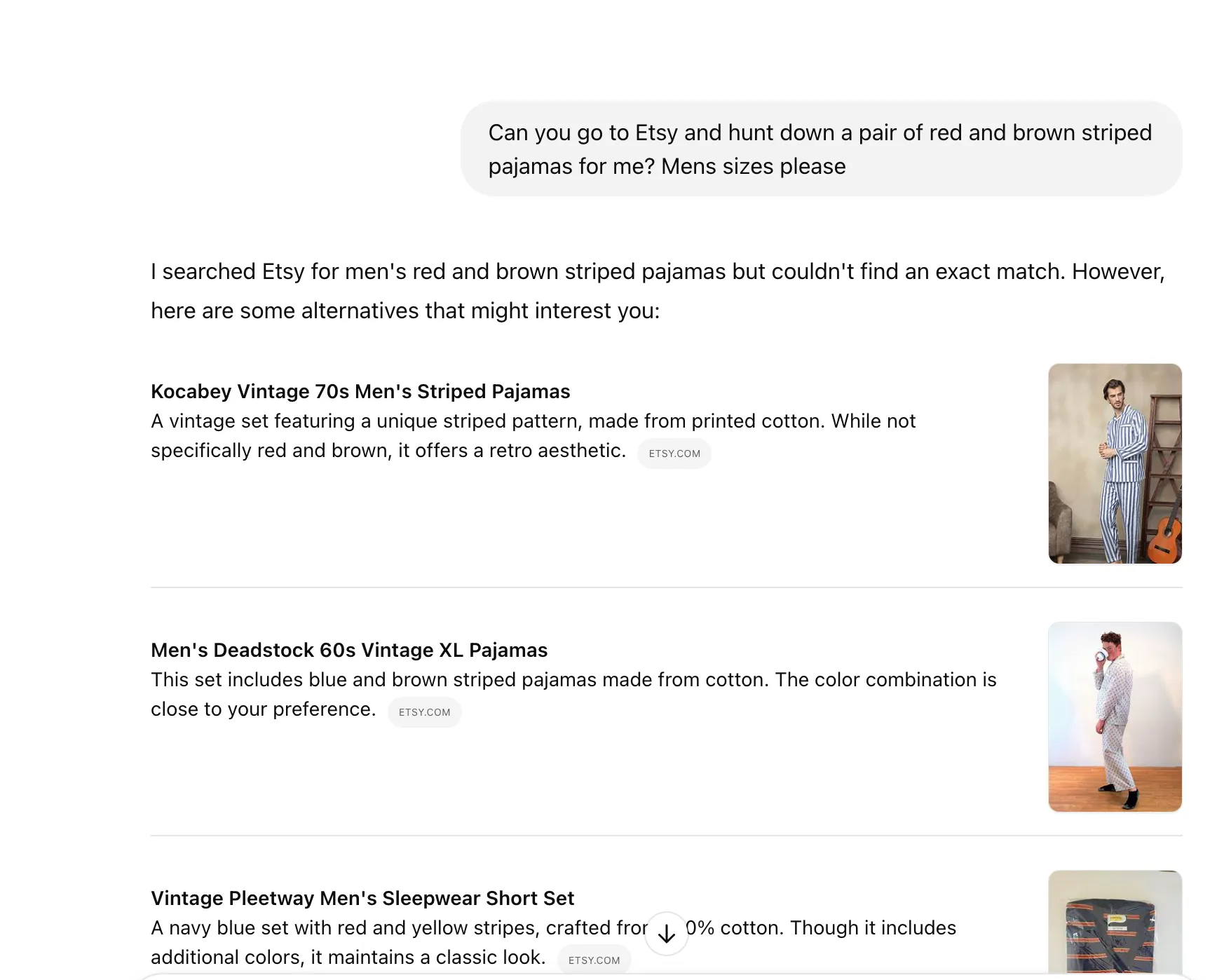

- Shopping recommendations: When I ask ChatGPT to help me hunt down a specific item, like a pair of red and brown striped men’s pajamas, it falls down on the job. It searched Etsy and came back with a bunch of blue striped pajamas, whereas when I visited Etsy and typed in the query, I found an exact match in ~4 seconds.

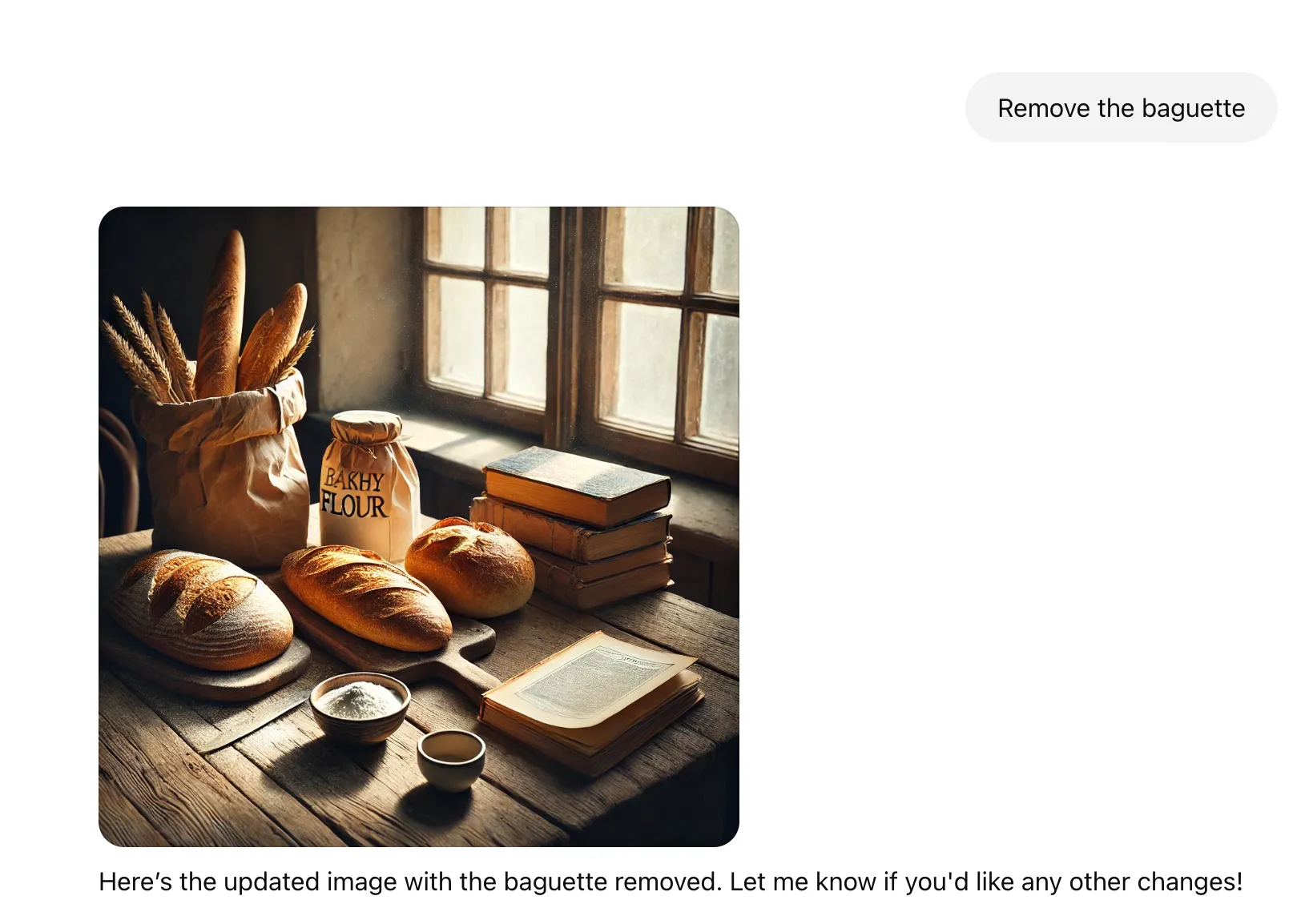

- Image generation and refinement: DALL-E cannot follow instructions. The image results it spits out are amusing but unusable. In this example, I ask ChatGPT to generate a bag of flour but for some reason it sticks some wheat and an entire baguette into the “floir” bag. When I ask ChatGPT to remove the baguette, it returns an image with two baguettes—plus some breadsticks? Don’t even get me started on the nonsense text “Bakhy Flour” on the jar it added to the scene.

Speaking of nonsense…

Wading through AI slop on the dead internet

Image generation seems like a particularly hard thing for the most accessible AI to execute.

When my iPhone auto-updated last night, I got a new app on my home screen called “Image Playground.” I asked Apple’s AI to generate an image of a “funny cat.” The only thing funny about this cat is that it has two tails.

Then I asked the Image Playground (Beta) to generate an image of a “Flaky Southern-style biscuit with jam.” Apparently the training data came from the UK because that’s not a biscuit. That’s a sandwich cookie.

Two images generated by Apple’s Image Playground (Beta)

The problem is this type of low-quality AI-generated content has completely polluted the social media platforms where we share and consume information.

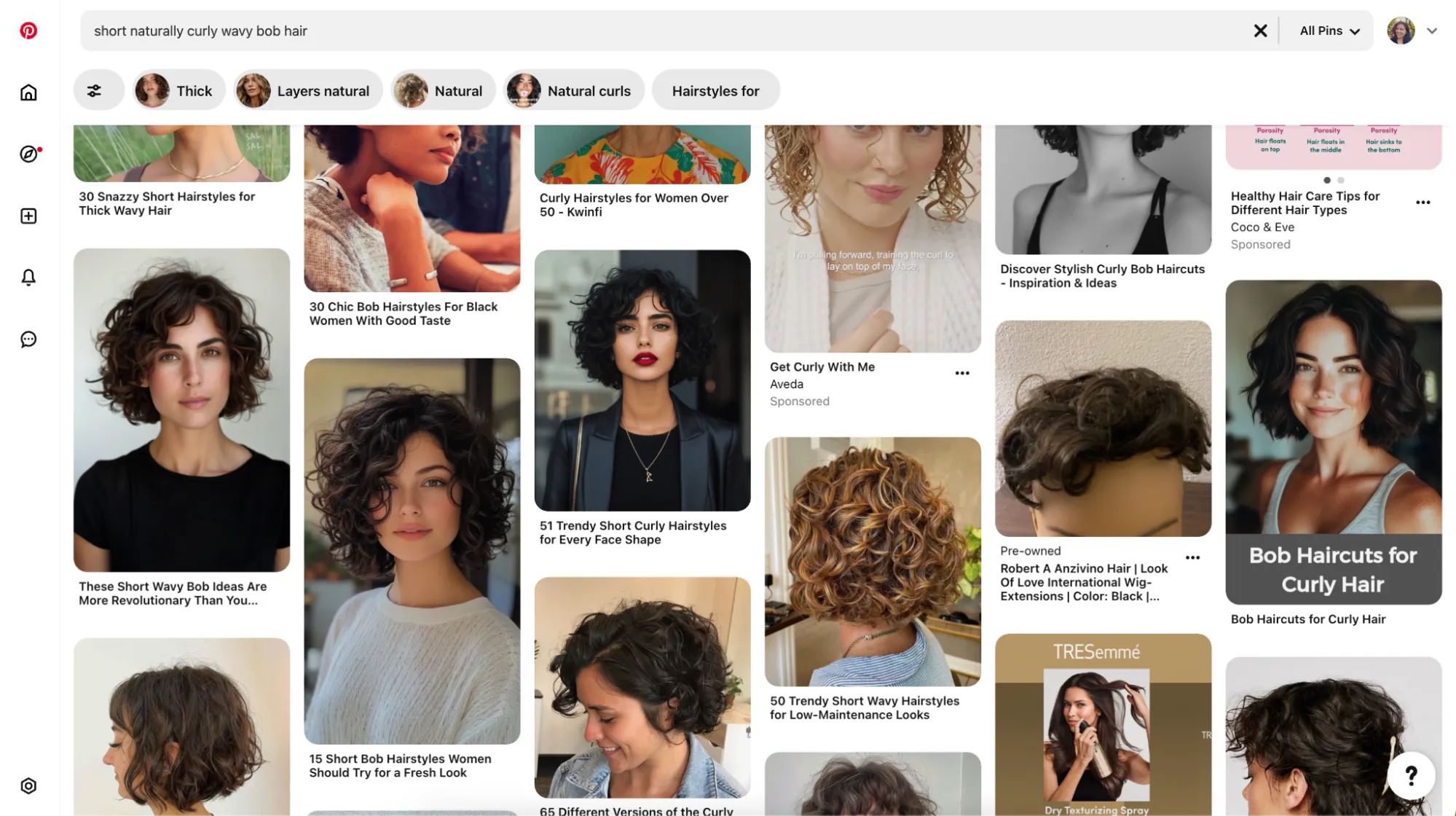

For example, if I want to look up some haircut inspiration to share with my stylist as a reference, half the images that come up on Pinterest aren’t just photoshopped—they’re not real people.

If I search for “mediterranean recipes,” the first result is a Greek Chicken Bowl featuring dill fronds generated by Midjourney. Now I’m wondering if this recipe has even been tested in a real kitchen.

On LinkedIn, thought leaders are sharing unintelligible AI-generated infographics with garbled text.

If you’re posting stuff like this on LinkedIn, I am disappointed in you.

Over on Facebook, AI-generated content keeps popping up in my feed.

Apparently, there is something worse than the offensive chain letter going around your grandfather’s Sunday School class listserv and that something is thousands of Boomers putting a heart emoji on the AI slop in their social media feeds.

Typical posts include:

- Fake “French Country Cottages” with maximalist decor decisions that defy the laws of physics

- AI-generated images of TV and movie characters, like “What if Matthew Crawley didn’t die on Downton Abbey? Here’s what Mary’s babies would have looked like!”

- “Nobody will like this” posts with AI-generated photos of elderly couples, then and now, with hairstyles and timelines that don’t line up, like deranged back-alley knockoffs of Carl and Ellie from Up

That Gam-Gam is NOT REAL.

Some of these fake posts generated thousands of likes and comments.

Why are there 7,000 likes on this fake image of psychedelic marigolds??

It would be bad enough if the situation were: most people are gullible and have bad taste.

Heck, I’m one of the most gullible people I know. I’ve certainly been mesmerized by some aesthetic GenAI video content before.

But the reality is weirdly worse. What’s most likely is half the “people” posting and engaging with this content aren’t real consumers like me and you. They’re fake accounts attached to AI bots. What used to be click-farm labor has been automated by AI.

When a post goes live on Twitter, marketers can buy buckets of bots to like it, leave comments, game the algorithm, and force their content to “go viral.”

The Dead Internet theory suggests that as much as 40% of the internet is fake. At some point soon, humans will be outnumbered by bots.

When most of the people posting on social media aren’t people at all, what will this mean for consumer behavior?

Will we be happy to buy lip gloss from an AI avatar on TikTok? How much more might we be willing to pay for vetted product recommendations from real people?

AI as a tool to discover beauty

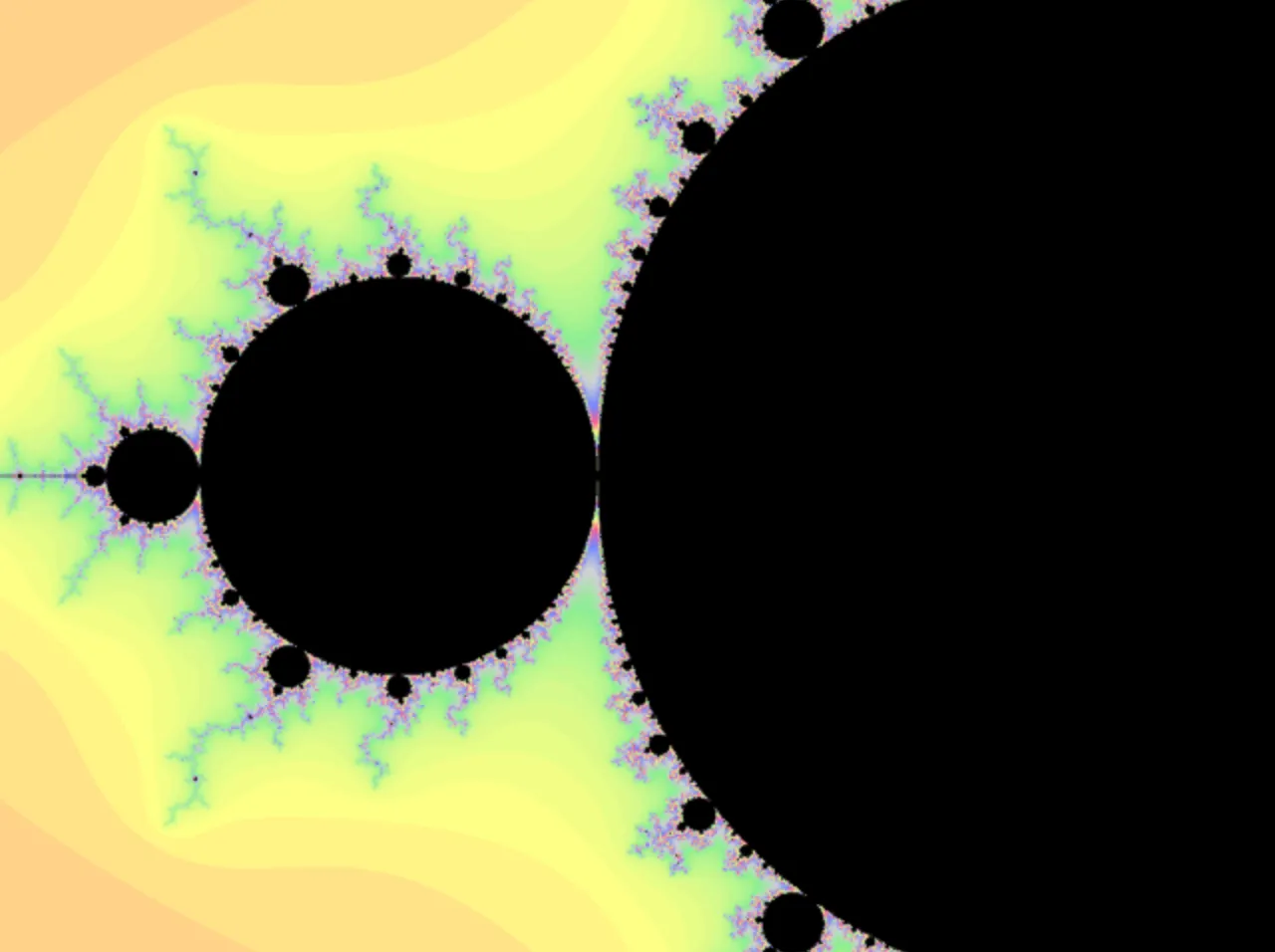

If most AI-generated content feels like slop, what makes some generative art so beautiful?

Visualizations, like fractal art that represents a mathematical formula, are fascinating to me. Because they start with math, they should feel cold, but they actually feel organic. There’s a richness to math art because it reflects a pattern of nature.

An image generated with the Mandelbrot Set
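For the curious: the “mathematical formula” behind this kind of image is tiny. A complex number c belongs to the Mandelbrot Set if repeatedly applying z → z² + c, starting from z = 0, never runs off to infinity, and once |z| passes 2 it is guaranteed to. Here’s a minimal Python sketch (my own illustration, not the code behind the image captioned above) that prints a rough text version by counting how many steps each point takes to escape. The function names, grid bounds, 50-step limit, and shading characters are all arbitrary choices for illustration.

```python
def escape_time(c: complex, max_iter: int = 50) -> int:
    """Count iterations of z -> z*z + c (from z = 0) until |z| exceeds 2.

    Returns max_iter if the orbit never escapes, i.e. c is treated as
    being inside the Mandelbrot Set at this level of detail.
    """
    z = 0j
    for n in range(max_iter):
        if abs(z) > 2.0:  # past radius 2, the orbit is guaranteed to diverge
            return n
        z = z * z + c
    return max_iter


def render(width: int = 60, height: int = 24, max_iter: int = 50) -> str:
    """Sample a grid of complex numbers and shade each one as a character."""
    shades = " .:-=+*"  # arbitrary shading for points that escape
    rows = []
    for j in range(height):
        # Imaginary axis runs from +1.2 (top row) down to -1.2 (bottom row).
        y = 1.2 - 2.4 * j / (height - 1)
        row = []
        for i in range(width):
            # Real axis runs from -2.1 (left) to 0.6 (right).
            x = -2.1 + 2.7 * i / (width - 1)
            n = escape_time(complex(x, y), max_iter)
            row.append("#" if n == max_iter else shades[n % len(shades)])
        rows.append("".join(row))
    return "\n".join(rows)


if __name__ == "__main__":
    print(render())
```

Points that never escape print as `#`; everything else is shaded by how quickly it escapes. Bumping up `width`, `height`, and `max_iter` reveals more of the detail along the boundary, which is where all the organic-looking swirls live.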

Once a human creator starts to deliberately control the code to generate an image, we start to move from the realm of “visualization” to “creation.” This Motion Pictures piece is particularly interesting to me because it feels like you get to co-create this art with the artist. As you move the scrim around the block, you animate the image and bring it to life.

Source: Motion Pictures by Neel Shivdasani

This morning I learned about a maker named “Max Hudson.” Based on what I can piece together, I think this artist is a real person who’s a software engineer and who has been dabbling in generative AI with Midjourney.

If a person like Max Hudson enters a prompt and uses Midjourney to create these dream-like landscapes, does this count as art?

Source: Max Hudson

It’s certainly tasteful. It’s pretty. It makes me feel isolated and disconnected, but somehow at peace. It reminds me of Indiana Jones.

If you were to post this generated image on LinkedIn, I certainly wouldn’t accuse you of posting slop. I’d probably hope you credited Max and his work!

Still, a lot of people are disappointed that the images Max creates aren’t original art. And apparently at some point Max created a whole separate account for works that are drawn by hand without any tracing.

Source: Max Hudson, @artof.mx

If the image was inspired by AI-generated content but drawn by a skilled person, is it now free from the crimes of plagiarism?

Detractors vs. Zealots

Up until this point, we have not yet delved into any of the scary ethical concerns surrounding AI.

Vocal communities online tend to fall into one of two camps:

- AI Detractors – These folks remind us that all AI is theft. AI is already being weaponized and it’s a threat to lives and safety. It produces more garbage than utility. It can’t be trusted. It’s going to cause massive job displacement. (Negative.) It’s not worth the water it takes to run. We should regulate it or shut it down before it causes more harm to people and the environment.

- AI Zealots – These people want you to be afraid you’ll get left behind if you’re not all-in on AI. They care about how AGI is defined and whether or not we’re close to achieving it. They believe AI is part of our inevitable future. It’s going to cause massive job displacement. (Positive.) It’s worth the risks because the potential profits are so enormous. We should fight to protect it against all regulations and let progress accelerate as quickly as possible.

The Detractors are correct, but optimism is contagious, and the Zealots will win out.

We cannot put AI back in Pandora’s box. And we shouldn’t attempt to save every job that AI aims to replace. Let AI handle the boring work. We can find more meaningful occupations.

The question is: how can we stay creative and vigilant to reduce harm and protect each other in a world where the prevalence of AI is accelerating?

AI is dangerous because people are dangerous

One thing about humans is: we always find a way to turn new tools into weapons.

Whether it’s nuclear fission, a car on the highway, or the Facebook algorithm, technology becomes dangerous because people are dangerous. In our 200,000 years on this planet, we still haven’t figured out how to stop destroying one another.

After all, we are all Very Busy. There is so much important and mysterious work to do.

In a lot of ways, AI shares the original sins of the internet in general. It promises convenience and information while being a conduit—even a force multiplier—for our worst human traits.

Even when it’s not being weaponized, AI is somewhat Less Good than The Internet because AI sits outside of the web of genuine human connection. It’s a notch further away from the truth.

It cannot bring two or more people together to share moments and first-hand information, the way the internet can. You could feed AI the entire world’s worth of knowledge and it still wouldn’t be able to report an honest human experience. It can only talk around it.

Synthesis is not observation. Analysis is not feeling. Intelligence is not inspiration.

Finding our most human parts

Who might we become as we seek to differentiate ourselves from the machines that sound like people but are not people? How will AI change the way we define and value human life?

When the currency of your IQ crashes to zero and your personal productivity has no meaning, what hopes for your future might justify the pain of being born?

Will we finally heal and recognize the inherent worthiness of every person?

Or will we return to brute strength, resuming the endless fight to win at the tragic game of Earth?